VPC endpoints connect your AWS Virtual Private Cloud (VPC) to AWS services securely without using the public internet. Problems with these connections often arise from misconfigurations in DNS settings, security groups, NACLs, routing, or resource limits. Here's what you need to know to quickly identify and fix these issues:

Key Steps to Troubleshoot:

- Check Endpoint Status: Use the AWS Console or CLI to confirm the endpoint is "Available."

- Verify Routing: Ensure route tables direct traffic to the endpoint.

- Review Security Groups and NACLs: Allow HTTPS (port 443) and the ephemeral ports used for return traffic (e.g., 32768–61000 on many Linux kernels, 49152–65535 on Windows).

- Test DNS Settings: Confirm private DNS resolves to the endpoint's IP.

- Inspect ENI Quotas: Ensure you haven’t exceeded Elastic Network Interface limits.

Common Problems:

- Security Issues: Blocked traffic due to restrictive rules in security groups or NACLs.

- Routing Errors: Missing or incorrect routes in subnet route tables.

- DNS Misconfigurations: Traffic bypassing the endpoint due to improper DNS setup.

- ENI Limits: Endpoint creation fails if Elastic Network Interface quotas are exceeded.

Tools to Use:

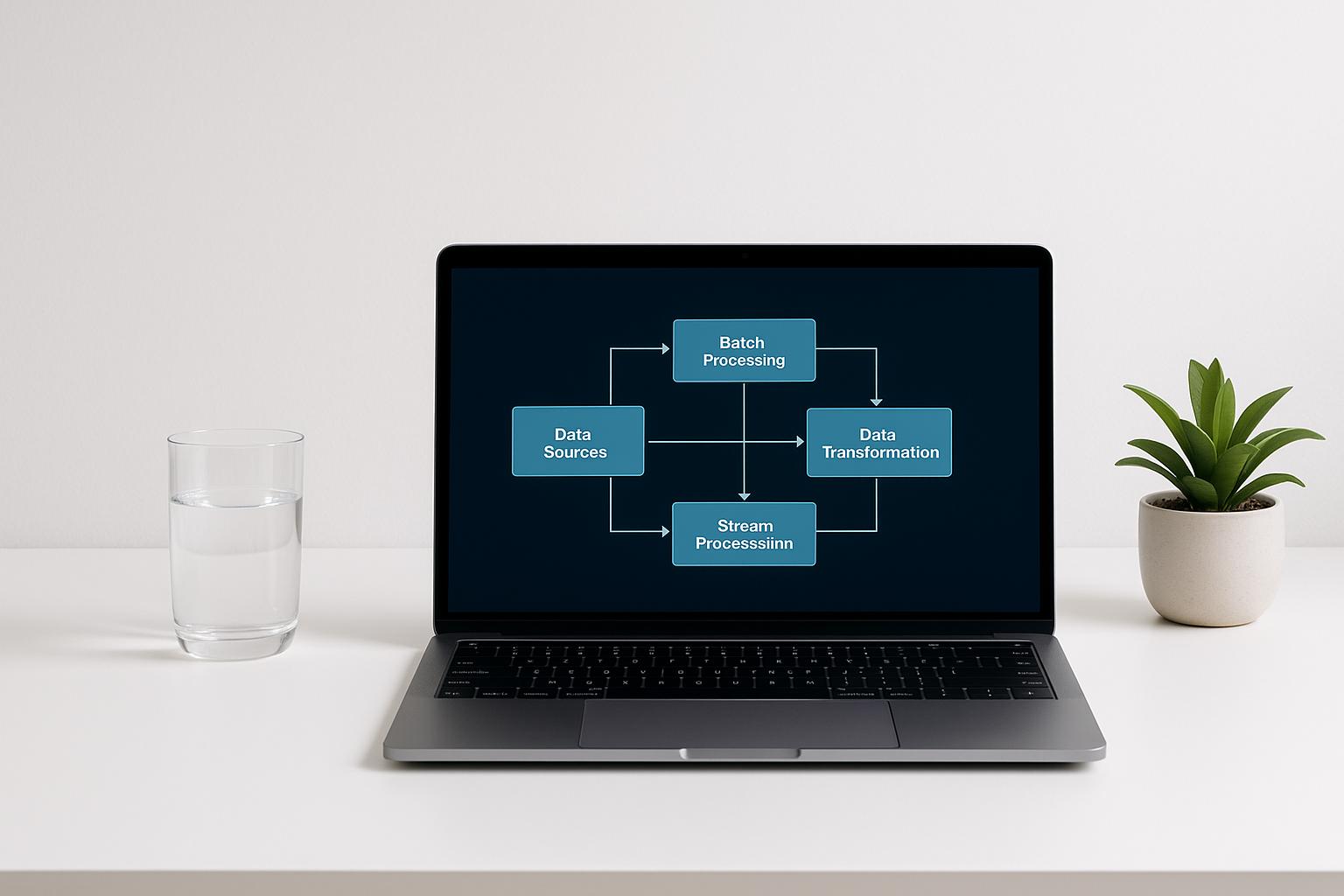

- AWS CLI: Check endpoint status and troubleshoot failures.

- VPC Flow Logs: Track traffic and pinpoint where it’s blocked.

- Reachability Analyzer: Simulate traffic paths to identify issues.

By systematically reviewing these areas, you can resolve VPC endpoint connectivity problems effectively while maintaining secure and reliable access to AWS services.

Check Your Environment Setup

Before diving into complex troubleshooting, take a moment to review your VPC's network settings. Many connectivity problems can be traced back to simple misconfigurations in subnet associations or route tables. By confirming these basics, you can often eliminate common setup issues right away.

Verify Subnet Associations and Route Table Configurations

Each subnet in a VPC is associated with exactly one route table at a time, and that table dictates how the subnet's traffic is directed. A subnet without an explicit association uses the VPC's main route table.

When you create a Gateway VPC Endpoint, AWS automatically adds a route to each route table you associate with the endpoint. The route uses the AWS service prefix list ID (e.g., pl-xxxxxxxx) as the destination and the VPC endpoint as the target. Double-check that this route is present in the route table of every subnet that needs the endpoint. If it’s missing, the route table was probably never associated with the endpoint, or the association was changed after the endpoint was set up.
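As a rough illustration of that check, the sketch below scans one route table for a prefix-list route that targets a gateway endpoint. It assumes the table has already been fetched as JSON (for example with `aws ec2 describe-route-tables`) and parsed into a dict. The sample data, including every ID in it, is hypothetical.

```python
def gateway_endpoint_routes(route_table):
    """Return (prefix_list_id, endpoint_id) pairs for gateway-endpoint routes."""
    pairs = []
    for route in route_table.get("Routes", []):
        prefix_list = route.get("DestinationPrefixListId")
        target = route.get("GatewayId", "")
        # A gateway endpoint route pairs a pl-... destination with a vpce-... target.
        if prefix_list and target.startswith("vpce-"):
            pairs.append((prefix_list, target))
    return pairs


# Hypothetical route table, shaped like one entry of the describe-route-tables output.
sample_route_table = {
    "RouteTableId": "rtb-0123456789abcdef0",
    "Routes": [
        {"DestinationCidrBlock": "10.0.0.0/16", "GatewayId": "local"},
        {"DestinationCidrBlock": "0.0.0.0/0", "NatGatewayId": "nat-0123456789abcdef0"},
        {"DestinationPrefixListId": "pl-00000000", "GatewayId": "vpce-0123456789abcdef0"},
    ],
}

print(gateway_endpoint_routes(sample_route_table))
```

An empty result for a subnet's route table suggests that table was never associated with the gateway endpoint.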

Common Connectivity Problems

When dealing with VPC endpoint connectivity, issues often boil down to three key areas: security configurations, network routing, and resource limitations. Gaining a solid grasp of these common trouble spots can help you quickly pinpoint the root cause and apply an effective fix.

Security Groups and NACLs Configuration Errors

Security groups and Network Access Control Lists (NACLs) act as your VPC endpoints' gatekeepers. While security groups automatically allow return traffic, NACLs require explicit rules for both inbound and outbound traffic.

For Interface VPC Endpoints, security groups must allow HTTPS traffic on port 443 from the resources that need access to the endpoint. This means ensuring the security group tied to the VPC endpoint permits inbound traffic from EC2 instances or other AWS resources.

A common pitfall is overly restrictive NACL rules. NACLs evaluate rules in ascending numerical order and stop at the first match, so a deny rule with a lower number takes precedence over an allow rule with a higher number. For instance, if you have a deny rule numbered 100 for all traffic and an allow rule numbered 200 for HTTPS, the deny rule matches first and blocks the traffic.
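The ordering behaviour can be modelled in a few lines. This is a simplified sketch, not how AWS implements NACLs: it matches only on protocol and port, ignores CIDR ranges, and uses a made-up rule format.

```python
def evaluate_nacl(rules, protocol, port):
    """Return 'allow' or 'deny' for one packet: the lowest-numbered matching rule wins."""
    for rule in sorted(rules, key=lambda r: r["number"]):
        protocol_matches = rule["protocol"] in ("all", protocol)
        low, high = rule["ports"]
        if protocol_matches and low <= port <= high:
            return rule["action"]
    return "deny"  # the implicit final rule denies anything left unmatched


ALL_PORTS = (0, 65535)

# The situation described above: the broad deny is evaluated before the HTTPS allow.
misordered = [
    {"number": 100, "protocol": "all", "ports": ALL_PORTS, "action": "deny"},
    {"number": 200, "protocol": "tcp", "ports": (443, 443), "action": "allow"},
]

# Same rules with the numbers swapped, so the allow is evaluated first.
reordered = [
    {"number": 100, "protocol": "tcp", "ports": (443, 443), "action": "allow"},
    {"number": 200, "protocol": "all", "ports": ALL_PORTS, "action": "deny"},
]

print(evaluate_nacl(misordered, "tcp", 443))  # deny
print(evaluate_nacl(reordered, "tcp", 443))   # allow
```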

Additionally, NACLs need to allow ephemeral ports. When an application initiates a connection to a VPC endpoint, the return traffic is addressed to an ephemeral port on the client - typically in the range of 32768–61000 on many Linux kernels or 49152–65535 on Windows. The NACL on the endpoint's subnet needs an outbound rule for that range, and the NACL on the client's subnet needs a matching inbound rule. Without them, connections may fail.

Route Table and DNS Problems

Routing and DNS misconfigurations are another common source of connectivity problems. Interface VPC Endpoints rely on DNS resolution to function. When private DNS is enabled for an Interface VPC Endpoint, private hosted zone entries override the public DNS names for AWS services. However, this setup only works if your VPC has DNS resolution and DNS hostnames enabled. Without these settings, applications might default to public IP addresses, bypassing the VPC endpoint entirely.

DNS issues can also cause inconsistent connectivity. For example, your application might sometimes resolve requests to a public IP address (via internet access) and other times to a private IP address associated with the VPC endpoint. If routing isn't configured correctly, this can lead to failed connections. Split-horizon DNS scenarios - such as when using custom DNS servers or connecting with on-premises networks - may further complicate matters, as DNS queries could resolve to public IPs, sending traffic through internet or NAT gateways instead of through the endpoint.

Elastic Network Interface (ENI) Limits

Resource limits, especially those related to Elastic Network Interfaces (ENIs), can also hinder connectivity. Interface VPC Endpoints create ENIs in your subnets, and exceeding the ENI quota can disrupt endpoint functionality. By default, an account can have up to 5,000 ENIs per Region, and this total includes ENIs from EC2 instances, load balancers, RDS instances, and other AWS services. In large setups with multiple VPC endpoints and instances, hitting this limit is easier than you might think.

Each Interface VPC Endpoint deploys one ENI in every subnet you select for it, with at most one subnet per Availability Zone. For example, if you deploy endpoints for several services across three Availability Zones, each service will create an ENI in every zone. With multiple services in use, the number of ENIs can add up quickly. It's worth noting that Gateway endpoints (used for S3 and DynamoDB) do not create ENIs.
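The arithmetic is simple enough to check before a rollout. The numbers below are made up for illustration. Only the 5,000 default comes from the figure quoted earlier, and your account's actual quota may differ.

```python
def planned_endpoint_enis(service_count, subnets_per_endpoint):
    """Each interface endpoint creates one ENI in every subnet selected for it."""
    return service_count * subnets_per_endpoint


def remaining_eni_headroom(quota, enis_in_use, planned):
    """ENIs left after the planned endpoints; a negative value means the quota is exceeded."""
    return quota - enis_in_use - planned


# Hypothetical rollout: 12 services, each with an endpoint ENI in 3 Availability Zones.
planned = planned_endpoint_enis(service_count=12, subnets_per_endpoint=3)
headroom = remaining_eni_headroom(quota=5000, enis_in_use=4970, planned=planned)

print(planned)   # 36
print(headroom)  # -6
```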

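Instance-level ENI limits are a separate constraint. For instance, a t3.micro instance supports only 2 network interfaces, while a t3.medium supports 3. Endpoint ENIs live in the subnet and are not attached to your instances, so they don't count toward this limit. If your application attaches additional ENIs to an instance for other purposes, though, exceeding the instance's limit causes those attachments to fail, which can look like an endpoint connectivity problem.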

Step-by-Step Troubleshooting Process

When your VPC endpoint connectivity hits a snag, following a structured troubleshooting process can save you time and effort. Start with the basics like checking the endpoint's status, and then move on to routing, security settings, and DNS configurations. This step-by-step approach helps pinpoint the issue and get things back on track.

Check Endpoint Status Using AWS CLI or Console

Begin by ensuring the VPC endpoint itself is working as expected. Its status can help you determine whether the problem lies with the endpoint configuration or elsewhere in your network.

To check the status using the AWS Management Console, go to the Amazon VPC console at https://console.aws.amazon.com/vpc/. Select Endpoints from the navigation pane and look at the Status column. If the status is anything other than "Available" (e.g., "Pending" or "Rejected"), the issue likely originates with the endpoint itself.

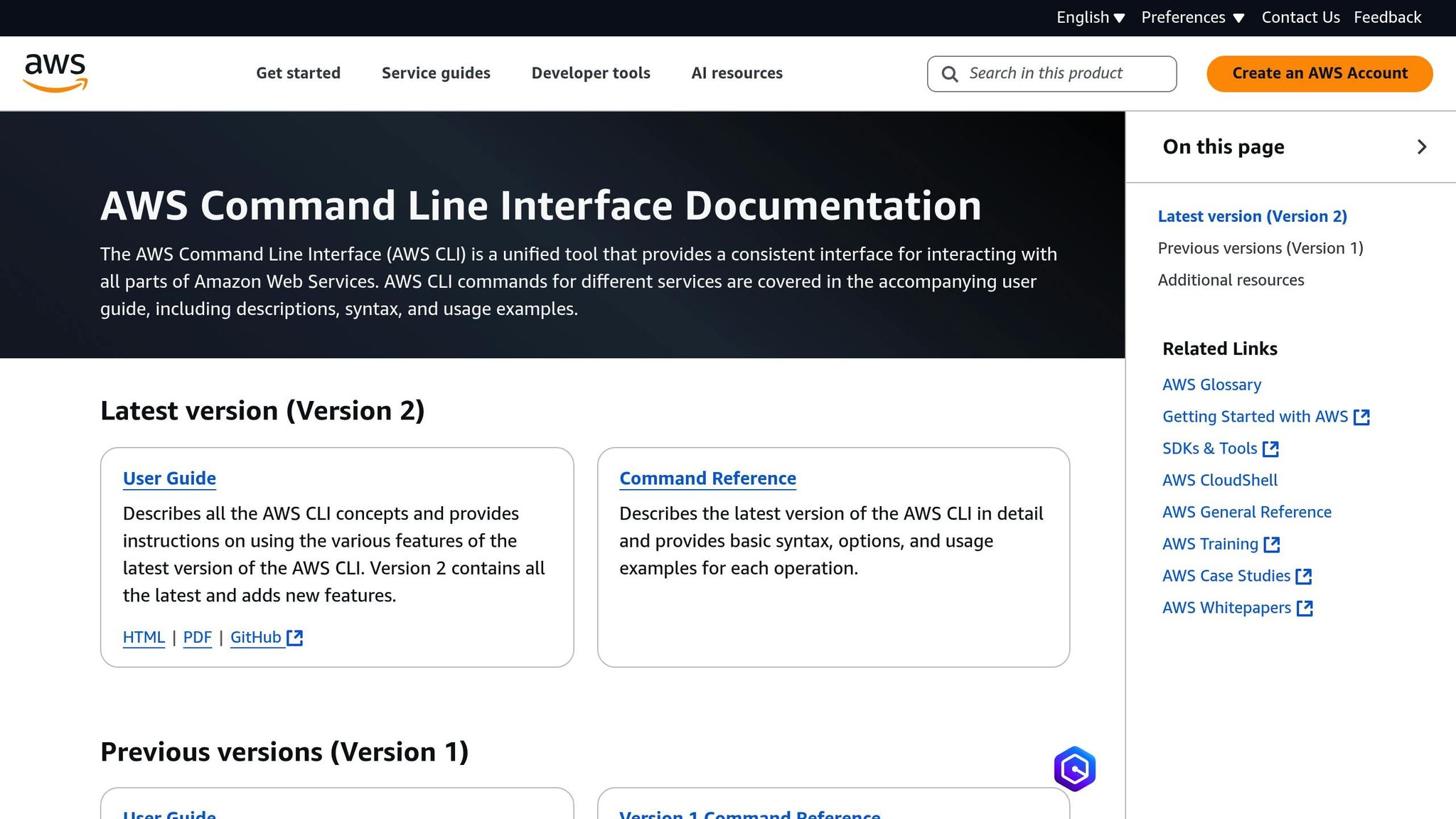

For a more detailed view, use the AWS CLI:

aws ec2 describe-vpc-endpoints

The State field in the output (e.g., available, pending, failed, rejected) provides quick insights. To focus on a specific endpoint, use its ID:

aws ec2 describe-vpc-endpoints --vpc-endpoint-ids vpce-032a826a

If the state shows as failed, check the LastError and FailureReason fields in the output for details. You can also filter endpoints by state to identify failed ones:

aws ec2 describe-vpc-endpoints --filters Name=vpc-endpoint-state,Values=failed
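If you save the JSON output of `describe-vpc-endpoints`, a short script can summarize which endpoints need attention. The sketch below assumes the output has been parsed into a dict and reads the `State` field and the `Message` inside `LastError`. The sample data and its IDs are hypothetical.

```python
def unhealthy_endpoints(describe_output):
    """Return (endpoint_id, state, last_error_message) for endpoints that are not available."""
    problems = []
    for endpoint in describe_output.get("VpcEndpoints", []):
        state = endpoint.get("State", "unknown")
        if state.lower() != "available":
            last_error = endpoint.get("LastError") or {}
            problems.append(
                (endpoint.get("VpcEndpointId"), state, last_error.get("Message", ""))
            )
    return problems


# Hypothetical output, trimmed to the fields this sketch reads.
sample_output = {
    "VpcEndpoints": [
        {"VpcEndpointId": "vpce-0aaaaaaaaaaaaaaaa", "State": "available"},
        {
            "VpcEndpointId": "vpce-0bbbbbbbbbbbbbbbb",
            "State": "failed",
            "LastError": {"Code": "ExampleCode", "Message": "example failure message"},
        },
    ],
}

for endpoint_id, state, message in unhealthy_endpoints(sample_output):
    print(endpoint_id, state, message)
```

To run this against live data, one option is to pipe the CLI output into the script and parse it with `json.load(sys.stdin)`.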

Verify Route Tables and Network Path

Once the endpoint status is confirmed, check the routing configuration to ensure traffic is directed properly. For Gateway endpoints (e.g., S3, DynamoDB), make sure your route tables include routes with the service prefix list as the destination and the VPC endpoint ID as the target. Missing or incorrect routes can cause traffic to bypass the endpoint and go through a NAT or internet gateway instead.

For Interface endpoints, confirm that traffic can reach the endpoint's Elastic Network Interfaces (ENIs). These ENIs have private IPs inside the VPC, so they are reached through the route table's local route. Make sure no custom routing or appliance sits between the client and the endpoint's private IPs. Be mindful of overlapping routes as well: if a subnet has both a default route (0.0.0.0/0) to a NAT gateway and a Gateway endpoint's prefix-list route, the more specific prefix-list route takes priority for that service's addresses.
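The "most specific route wins" rule is ordinary longest-prefix matching, which the following sketch models with the standard `ipaddress` module. The route targets are placeholders, and the `52.216.0.0/15` entry is only an illustrative stand-in for a CIDR inside a service prefix list, not an authoritative S3 range.

```python
import ipaddress


def select_route(routes, destination_ip):
    """Return the target of the longest-prefix route that contains the destination IP."""
    ip = ipaddress.ip_address(destination_ip)
    best = None
    for cidr, target in routes:
        network = ipaddress.ip_network(cidr)
        if ip in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, target)
    return best[1] if best else None


# Hypothetical route table: local route, default route to NAT, and one
# illustrative CIDR standing in for a gateway endpoint's prefix list.
routes = [
    ("10.0.0.0/16", "local"),
    ("0.0.0.0/0", "nat-gateway"),
    ("52.216.0.0/15", "gateway-endpoint"),
]

print(select_route(routes, "52.216.10.20"))  # gateway-endpoint
print(select_route(routes, "8.8.8.8"))       # nat-gateway
print(select_route(routes, "10.0.1.5"))      # local
```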

Review Security Groups, NACLs, and Logs

Check the security settings for both the VPC endpoint and the resources trying to connect to it.

For Interface endpoints, the security group attached to the endpoint must allow inbound HTTPS traffic on port 443 from your source resources. Similarly, the security groups for your EC2 instances or other clients should permit outbound HTTPS traffic to the endpoint.

Network Access Control Lists (NACLs) are stateless, so each direction needs its own rule. On the endpoint's subnet, allow inbound HTTPS (port 443) and outbound traffic on the ephemeral port ranges (commonly 32768–61000 for Linux and 49152–65535 for Windows). On the client's subnet, allow the mirror image: outbound HTTPS and inbound ephemeral ports. A common mistake is permitting the outbound connection but blocking return traffic on these high-numbered ports.
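Because each direction is evaluated separately, it can help to walk all four checks for a single connection. The sketch below is a simplified model with a made-up rule format of `(rule_number, low_port, high_port, action)` tuples. It ignores protocol and CIDR matching and assumes the client and the endpoint sit in different subnets.

```python
def first_match(rules, port):
    """Evaluate rules in ascending rule-number order; unmatched traffic is denied."""
    for _, low, high, action in sorted(rules):
        if low <= port <= high:
            return action
    return "deny"


def blocked_hops(client_nacl, endpoint_nacl, client_port):
    """Return the NACL checks that block a client -> endpoint HTTPS connection."""
    checks = {
        "client subnet outbound 443": first_match(client_nacl["outbound"], 443),
        "endpoint subnet inbound 443": first_match(endpoint_nacl["inbound"], 443),
        "endpoint subnet outbound reply": first_match(endpoint_nacl["outbound"], client_port),
        "client subnet inbound reply": first_match(client_nacl["inbound"], client_port),
    }
    return [name for name, action in checks.items() if action != "allow"]


# Hypothetical NACLs: the client subnet forgets to admit replies on ephemeral ports.
client_nacl = {
    "outbound": [(100, 443, 443, "allow")],
    "inbound": [(100, 443, 443, "allow")],
}
endpoint_nacl = {
    "inbound": [(100, 443, 443, "allow")],
    "outbound": [(100, 1024, 65535, "allow")],
}

print(blocked_hops(client_nacl, endpoint_nacl, client_port=50000))
```

With the sample rules, only the reply leg into the client subnet is reported, which matches the symptom of connections that open but then hang.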

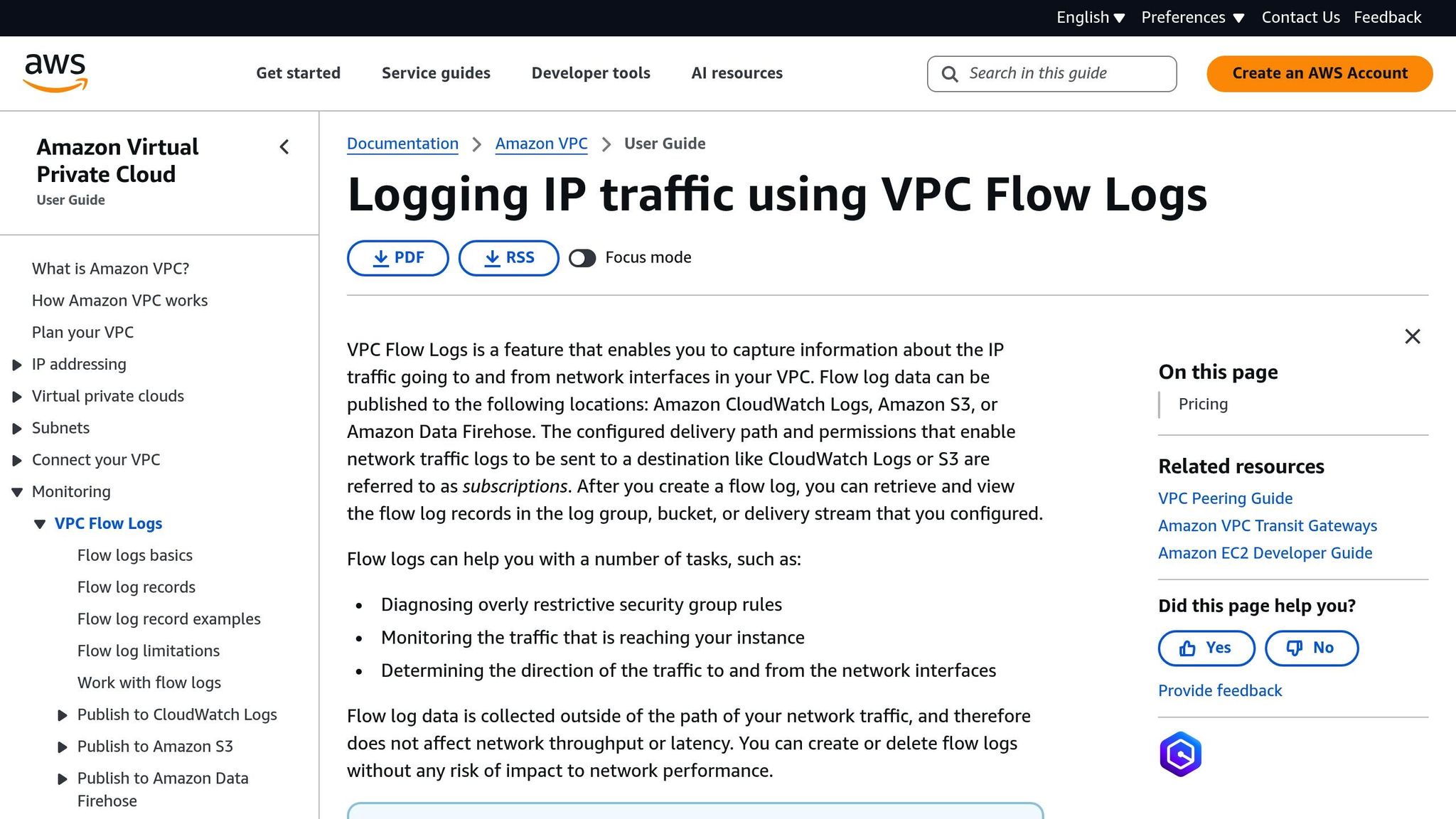

Enable VPC Flow Logs to capture details about accepted and rejected traffic. Look for REJECT entries related to your connection attempts. Additionally, monitor the endpoint's CloudWatch metrics in the AWS/PrivateLinkEndpoints namespace, such as PacketsDropped and RstPacketsReceived, to identify capacity or network issues.

Test Connectivity from an EC2 Instance

Testing directly from an EC2 instance in the same subnet as your VPC endpoint can help isolate network path issues. Use tools like telnet or nc (netcat) to check basic connectivity:

telnet vpce-0f89a33420c1931d7-abcdefgh.elasticloadbalancing.us-east-1.vpce.amazonaws.com 443

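A successful connection indicates that routing and security configurations are correct. A timeout usually points to traffic being silently dropped by security groups, NACLs, or a missing route. A name-resolution error points to DNS. A "connection refused" error means the packet reached a host that is not accepting connections on that port, which often indicates that the name resolved to an unintended address.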
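The same probe can be scripted when telnet or nc is not installed. The following is a minimal sketch using Python's standard `socket` module. The category labels are this example's own wording, and the endpoint name in the usage comment is a placeholder to replace with your endpoint's DNS name.

```python
import socket


def classify_failure(exc):
    """Map a connection exception to the likely problem area."""
    if isinstance(exc, socket.gaierror):
        return "dns: the name did not resolve"
    if isinstance(exc, ConnectionRefusedError):
        return "refused: host reached, but nothing is accepting on that port"
    if isinstance(exc, (socket.timeout, TimeoutError)):
        return "timeout: traffic is probably dropped by a security group, NACL, or route"
    return "other: " + type(exc).__name__


def probe(host, port=443, timeout=5.0):
    """Attempt a TCP connection and return 'connected' or a failure category."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "connected"
    except OSError as exc:
        return classify_failure(exc)


# Example usage (hypothetical endpoint name):
# print(probe("vpce-EXAMPLE.elasticloadbalancing.us-east-1.vpce.amazonaws.com"))
```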

For additional testing, use AWS CLI commands. For example, to test an S3 Gateway endpoint:

aws s3 ls --region us-east-1

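Adding the --debug flag shows the endpoint URL the CLI connects to. Keep in mind that a Gateway endpoint does not change DNS: S3 names still resolve to public IPs, and the prefix-list route steers that traffic through the endpoint. Seeing public IPs is therefore expected here. Private IPs should appear only when you use an Interface endpoint.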

Another handy tool is curl, which can test HTTP/HTTPS connectivity and provide detailed error messages:

curl -v https://s3.us-east-1.amazonaws.com

The verbose output shows DNS resolution, connection setup, and SSL/TLS handshakes. Ensure the resolved IPs fall within your VPC's private IP range when using Interface endpoints.

If these tests don’t pinpoint the issue, check for DNS problems next.

Fix DNS Resolution Issues

DNS misconfigurations can lead to unreliable behavior, where applications sometimes use the VPC endpoint and other times default to public routes. For Interface endpoints, private DNS settings create private hosted zone entries that override public DNS names.

Ensure your VPC has DNS resolution and DNS hostnames enabled. Without these, private DNS won’t work, and applications may connect via public IPs. Test DNS resolution with:

nslookup s3.us-east-1.amazonaws.com

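With an Interface endpoint and private DNS enabled, the output should show private IPs from your VPC’s CIDR range. If public IPs appear, double-check your VPC's DNS settings and the endpoint's private DNS configuration. With only a Gateway endpoint, public IPs are expected, because routing rather than DNS directs the traffic.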
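To avoid eyeballing addresses, you could compare the resolved IPs against the VPC CIDR programmatically. The sketch below takes the addresses as input, gathered however you prefer (for example from the nslookup output). The sample addresses and CIDR are hypothetical.

```python
import ipaddress


def classify_resolved_ips(resolved_ips, vpc_cidr):
    """Label each resolved address as inside or outside the VPC CIDR."""
    network = ipaddress.ip_network(vpc_cidr)
    return {
        ip: "inside VPC" if ipaddress.ip_address(ip) in network else "outside VPC"
        for ip in resolved_ips
    }


# Hypothetical lookup results for a service name, checked against a 10.0.0.0/16 VPC.
result = classify_resolved_ips(["10.0.1.25", "52.216.10.20"], "10.0.0.0/16")
print(result)
```

Any address labelled "outside VPC" for a service that should use an Interface endpoint suggests the query is not being answered from the endpoint's private hosted zone.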

If you’re using custom DNS servers inside the VPC (e.g., self-managed or third-party), make sure they can resolve AWS private hosted zones. Set up DNS forwarding for AWS service domains to the VPC’s default DNS resolver (the VPC CIDR base address +2, like 10.0.0.2 for a 10.0.0.0/16 VPC).
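The "+2" rule is easy to compute for any CIDR. A small sketch using the standard `ipaddress` module is shown below. It assumes you pass the VPC's primary CIDR block.

```python
import ipaddress


def vpc_resolver_address(vpc_cidr):
    """Return the VPC DNS resolver address: the network base address plus two."""
    network = ipaddress.ip_network(vpc_cidr)
    return str(network.network_address + 2)


print(vpc_resolver_address("10.0.0.0/16"))    # 10.0.0.2
print(vpc_resolver_address("172.31.0.0/16"))  # 172.31.0.2
```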

For scenarios involving split-horizon DNS - like accessing AWS services from on-premises networks via VPN or Direct Connect - note that the VPC’s default resolver cannot be queried directly from outside the VPC. Create a Route 53 Resolver inbound endpoint in the VPC, then add conditional forwarding rules on your on-premises DNS servers so that queries for the relevant AWS service domains go to the inbound endpoint's IP addresses.


Advanced Troubleshooting Methods

Sometimes, basic troubleshooting steps just don’t cut it when dealing with VPC endpoint connectivity issues. For more complex problems - like intermittent outages or resource limitations - advanced tools and configuration tweaks can help you dig deeper. Below, we’ll explore some effective methods to identify and resolve these tougher challenges.

Use VPC Flow Logs and Reachability Analyzer

VPC Flow Logs are an excellent tool for tracking network traffic and spotting connectivity issues. You can enable them at the VPC, subnet, or network interface level to monitor traffic patterns. Focus on the action field in the logs: entries marked as ACCEPT indicate successful connections, while REJECT entries highlight where traffic is being blocked.

To troubleshoot endpoint issues, filter Flow Logs using the private IPs of the VPC endpoint. If you see rejected connections, it could be due to restrictive security group rules or network ACLs blocking the traffic.
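A filter like that can be sketched in a few lines once the logs are exported as text. The example assumes the default flow log format (version 2, 14 space-separated fields). The sample records, account ID, and interface IDs are made up.

```python
FIELDS = [
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
]


def rejected_endpoint_flows(lines, endpoint_ips):
    """Return parsed REJECT records whose source or destination is an endpoint IP."""
    hits = []
    for line in lines:
        parts = line.split()
        if len(parts) != len(FIELDS):
            continue  # skip headers, blank lines, or custom-format records
        record = dict(zip(FIELDS, parts))
        touches_endpoint = (
            record["srcaddr"] in endpoint_ips or record["dstaddr"] in endpoint_ips
        )
        if touches_endpoint and record["action"] == "REJECT":
            hits.append(record)
    return hits


# Hypothetical records; 10.0.2.40 stands in for the endpoint ENI's private IP.
sample_lines = [
    "2 123456789012 eni-0aaa 10.0.1.15 10.0.2.40 50312 443 6 3 180 1700000000 1700000060 REJECT OK",
    "2 123456789012 eni-0aaa 10.0.1.16 10.0.2.40 50400 443 6 10 5200 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0bbb 10.0.1.15 10.0.3.9 50313 22 6 1 60 1700000000 1700000060 REJECT OK",
]

for flow in rejected_endpoint_flows(sample_lines, {"10.0.2.40"}):
    print(flow["srcaddr"], "->", flow["dstaddr"], "port", flow["dstport"], flow["action"])
```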

Another invaluable tool is the VPC Reachability Analyzer, which simulates traffic flows and maps the entire network path - from your source EC2 instance to the endpoint’s ENI. It evaluates components like route tables, security groups, and NACLs. If the path is unreachable, the analyzer will identify the specific issue, such as a missing route or a restrictive security group rule.

For Interface endpoints, keep in mind that many applications use high-numbered ephemeral ports for outbound connections. If your NACL rules are too restrictive, they might block return traffic on these ports, causing connectivity issues.

Fix ENI Limit Issues

Every Interface VPC endpoint uses Elastic Network Interfaces (ENIs) in your VPC. If you’ve hit your ENI quota in a Region, new endpoints might fail to create or show a "failed" state. To check your current ENI usage, head to the Network Interfaces section in the Amazon EC2 console. Look for unused ENIs that you can safely delete - these sometimes linger after instances are terminated or resources are decommissioned.

"Your quota for elastic network interfaces increases automatically as new instances are launched on the account." – AWS re:Post

If you need more ENIs than your quota allows, you can request an increase through the AWS Service Quotas console. Search for "Amazon Virtual Private Cloud (Amazon VPC)" and select "Network Interfaces per Region" to submit your request. Additionally, note that the number of interface endpoints per VPC is capped by its own quota (50 by default, combined with Gateway Load Balancer endpoints). If you’re nearing this limit, request an increase through Service Quotas or contact AWS Support to explore your options.

Configure Ephemeral Port Ranges in NACLs

Network ACLs (NACLs) are stateless, meaning they don’t automatically allow return traffic for outbound connections. This can cause problems with ephemeral ports - random high-numbered ports used for outbound traffic.

To avoid issues, make sure your NACLs allow HTTPS (port 443) toward the endpoint and the ephemeral port range for the replies. On the client subnet, that means outbound 443 and inbound ephemeral ports. On the endpoint subnet, it means inbound 443 and outbound ephemeral ports. A common misstep is allowing HTTPS traffic while inadvertently blocking the return ports, which can lead to failed or hanging connections.

Review the NACL rules for the subnets containing both your client resources and the VPC endpoint ENIs. If you suspect NACL restrictions are the problem, temporarily allow a broader port range - like 1024–65535 - to test connectivity. Once you’ve identified the issue, tighten the rules to permit only the required ports. Also, keep in mind the protocol requirements: most VPC endpoint traffic relies on TCP, but certain scenarios, like DNS lookups or health checks, might require UDP as well.

Conclusion

When troubleshooting VPC endpoints, focus on verifying deployment, reviewing network settings, and using diagnostic tools. These steps form the backbone of an effective troubleshooting process.

Start by checking network configurations. Issues with route tables, security groups, or NACLs are often behind connectivity problems. Even a properly deployed endpoint can fail if the network setup blocks essential traffic. Confirm the endpoint’s status, then systematically review route tables, security groups, and NACLs to ensure everything is configured correctly.

Diagnostic tools can be a game-changer. VPC Flow Logs help you track traffic patterns, while the Reachability Analyzer maps network paths to identify where traffic is being blocked. To validate your findings, test connectivity from an EC2 instance within the same subnet as the endpoint.

Proactive monitoring is key to avoiding future issues. VPC Flow Logs help you spot rejected or misrouted traffic and show traffic trends over time. Pair them with the usage figures in Service Quotas to catch resource constraints, like ENI quota limits, before they escalate. Regularly monitoring both can save you from unexpected disruptions.

Finally, don’t overlook DNS resolution. Misconfigured DNS settings can reroute traffic through public paths, undermining your setup. Always test name resolution from within your VPC as part of the troubleshooting process. By addressing these areas, you can resolve issues efficiently and maintain a reliable VPC environment.

FAQs

What are the common signs of a VPC endpoint connectivity issue?

Here are some common signs that might indicate connectivity problems with a VPC endpoint:

- DNS resolution failures when trying to connect to the endpoint.

- The endpoint's status in the AWS Management Console shows something other than 'Available'.

- You experience timeouts or errors while attempting to access the associated service.

- Misconfigurations in the route table or security group settings.

If any of these issues arise, it's worth double-checking your VPC endpoint configuration and related resources to pinpoint and address the problem.

How can I use AWS tools like Reachability Analyzer and VPC Flow Logs to troubleshoot VPC endpoint connectivity issues?

To address VPC endpoint connectivity issues, begin with the AWS Reachability Analyzer. This tool examines network paths to spot potential misconfigurations in security groups, route tables, or network ACLs. It’s a practical way to uncover where connectivity might be failing.

Another useful resource is VPC Flow Logs, which offer detailed information about IP traffic within your VPC. By analyzing these logs, you can identify unusual patterns or pinpoint where traffic may be dropped due to configuration errors. Using these tools together provides a comprehensive method for diagnosing and fixing connectivity challenges in your AWS setup.

What should I do if I think my DNS settings are causing traffic to bypass my VPC endpoint?

If you think DNS settings might be causing traffic to bypass your VPC endpoint, the first step is to ensure that private DNS is enabled on your endpoint. Next, review your VPC settings to confirm that both DNS resolution and DNS hostnames are turned on. With these in place, the AWS service's DNS name resolves to the private IP addresses of your VPC endpoint.

To troubleshoot, tools like nslookup can be handy for testing DNS resolution. Use it to verify that DNS queries are resolving to the correct endpoint. If you're using custom DNS records, take a closer look to make sure they're properly set up to route traffic to the VPC endpoint. Incorrectly configured DNS records are a common reason for traffic bypassing the intended path.