Most likely everyone will agree that getting started with a complex cloud data warehouse like Amazon Redshift can be an intimidating process.

Well, this in-depth step-by-step guide aims to make that process straightforward for complete beginners by walking through the initial setup and configuration from the ground up.

You'll learn core Redshift terminology, create an AWS account, launch your first cluster, load data, run queries, monitor performance, and wrap things up by cleaning up resources - all tailored specifically to help first-timers get up and running with this powerful cloud data warehousing solution.

Introduction to Amazon Redshift for Beginners

Amazon Redshift is a fully managed, petabyte-scale data warehouse service designed for big data analytics. As a cloud data warehouse, Redshift enables companies to leverage the scale and flexibility of the cloud to perform high-performance analytics at a lower cost compared to traditional on-premises data warehouses.

Exploring What is Amazon Redshift?

Amazon Redshift provides a SQL interface to query petabytes of structured and semi-structured data across data processing, machine learning, and data warehousing workloads. Key capabilities include:

- Scalable compute and storage: Redshift allows you to elastically scale up or down cluster capacity to meet analytical needs. It manages the underlying infrastructure.

- Massively parallel processing (MPP): Redshift distributes data and query load across multiple nodes for fast performance.

- Columnar data storage: Instead of storing data rows, Redshift organizes data by columns for higher compression and better query performance.

- Advanced compression: Redshift employs multiple compression techniques to optimize storage and minimize I/O. Compression ratios of 2-4x are common.

- Integration with data lakes: Redshift Spectrum allows low-cost queries across exabytes of unstructured data in S3 without any data movement.

As a fully managed service, Redshift removes infrastructure management tasks so teams can focus on analyzing data to drive insights.

The Advantages of Using a Data Warehouse System

Key benefits of using a cloud data warehouse like Amazon Redshift include:

- Cost savings - Pay only for the resources used instead of unused on-premises capacity. Redshift storage and compute can scale independently.

- Performance at scale - Leverage MPP architecture and columnar storage for fast queries across petabytes of data.

- Elasticity - Scale cluster capacity up or down easily. No waiting for infrastructure.

- Data lake integration - Directly query vast amounts of raw data in S3 without any data movement using Redshift Spectrum.

Setting Tutorial Expectations and Goals

By the end of this beginner Redshift tutorial you will understand:

- The architecture and components of Amazon Redshift

- How to launch a Redshift cluster and connect to it

- How to load sample data sets into Redshift tables

- How to perform basic SQL queries in Redshift

These skills will provide a foundation for building performant analytics pipelines leveraging Amazon Redshift at scale.

Defining Key Amazon Redshift Terminology

- Cluster - The primary Redshift resource consisting of leader and compute nodes. Manages and executes queries.

- Node - The individual server/instance that stores data and performs computations as part of a cluster.

- Leader Node - The node that manages client connections and receives queries to execute across the cluster.

- Compute Node - Nodes that store data and perform queries and computations.

- Columnar Storage - Data is stored sequentially by column rather than row to optimize analytics performance.

How do I get started with Redshift?

Getting started with Amazon Redshift requires a few key steps:

Create an AWS Account

If you don't already have one, sign up for an AWS account. This will allow you to access AWS services like Amazon Redshift.

Launch a Redshift Cluster

Use the AWS Management Console to launch your first Redshift cluster. You'll need to configure settings like node type, number of nodes, and database credentials.

Configure Access

Set up inbound rules in your cluster's Security Group to allow access from your local machine or applications. By default, a Redshift cluster blocks all incoming connections.

Connect a SQL Client

Install a SQL client like SQL Workbench/J to connect to your Redshift cluster. Use the endpoint and database credentials to log in.

Load Data

Use the COPY command to load data from Amazon S3 buckets into your Redshift tables. This makes data available for querying.

Query Data

Use SQL commands through your SQL client to start querying the data loaded into Amazon Redshift. Experiment with example queries to understand Redshift performance.

With these basic steps, you can get started querying data in Amazon Redshift. As you work with larger data sets and more complex queries, make sure to monitor query performance and optimize table design.

What is Redshift for dummies?

Redshift is Amazon's fully managed, petabyte-scale data warehouse service built for the cloud. As a beginner, there are a few key things to understand about Redshift:

-

Data Warehouse - Redshift is optimized for analytic workloads, like reporting, dashboards, and aggregating large data sets. It stores data in a columnar format for fast queries.

-

Cloud-based - Redshift runs on AWS infrastructure and scales storage and compute separately. You don't manage any servers or infrastructure.

-

SQL Interface - You interact with Redshift via standard SQL for querying, loading data, and managing users/permissions. No specialized skills needed.

-

Cost-effective - You only pay for the resources provisioned. Redshift provides great performance per dollar spent compared to on-prem data warehouses.

-

Fast Queries - Redshift uses advanced query optimization, columnar storage, and MPP architecture to deliver very fast queries, even on huge data sets.

To get started with Redshift as a beginner, the basic steps are:

- Sign up for an AWS account

- Launch a Redshift cluster and configure access

- Load data from S3 buckets using COPY command

- Start querying data with SQL

The key things to remember are that Redshift simplifies data warehousing by automating the infrastructure. You interact through standard SQL. Redshift makes it easy to get started analyzing big data in the cloud.

Is Amazon Redshift easy to use?

Amazon Redshift is designed to be easy to use for those familiar with SQL and data warehousing concepts. Here are some of the key features that make Amazon Redshift beginner-friendly:

Simple Setup

- Creating a Redshift cluster takes just a few clicks in the AWS console. You specify the node type, number of nodes, and database credentials.

- The AWS infrastructure handles provisioning servers, storage, networking, installation, configuration and ongoing management.

- You don't need to manage any hardware or databases yourself.

Familiar SQL Interface

- Amazon Redshift uses standard SQL for working with data, so you can use existing SQL skills.

- It works with common SQL clients and business intelligence tools like Tableau, Looker, and Power BI.

- You can query other data sources using Redshift Spectrum and federated queries.

Automatic Management

- Redshift handles backups, patching, upgrades, monitoring, failure recovery, and other administrative tasks automatically.

- You don't need a dedicated DBA to manage the system.

Performance Optimization

- Redshift has a massively parallel processing architecture that optimizes queries to run fast on large data sets.

- It manages the distribution of data across nodes and optimizes query execution plans.

So in summary, Amazon Redshift makes it easy for developers and analysts familiar with SQL to start using a petabyte-scale data warehouse. The AWS infrastructure and services take care of the deployment, management, tuning and scaling of the system. You just load data and start querying using standard SQL syntax. This simplicity and automation allows you to focus on analyzing data rather than managing infrastructure.

Is Redshift an ETL tool?

No, Amazon Redshift is not an ETL tool. It is a cloud data warehouse that enables fast queries using standard SQL. However, Redshift can integrate with ETL tools as part of a data pipeline.

Here are some key differences between ETL tools and Amazon Redshift:

- ETL Tools

- Used for extracting, transforming and loading data from sources into data warehouses

- Handle data preparation and movement

- Examples: AWS Glue, AWS Data Pipeline, Talend, Informatica

- Amazon Redshift

- A cloud data warehouse optimized for analytics

- Enables fast SQL queries on large datasets

- Requires data to be loaded and transformed before querying

- Integrates with ETL tools as destination data store

While Redshift has COPY and UNLOAD commands to load and extract data, it does not have native data transformation features. ETL tools typically perform the extract, transform and load processes before the data is available for analytics in Redshift.

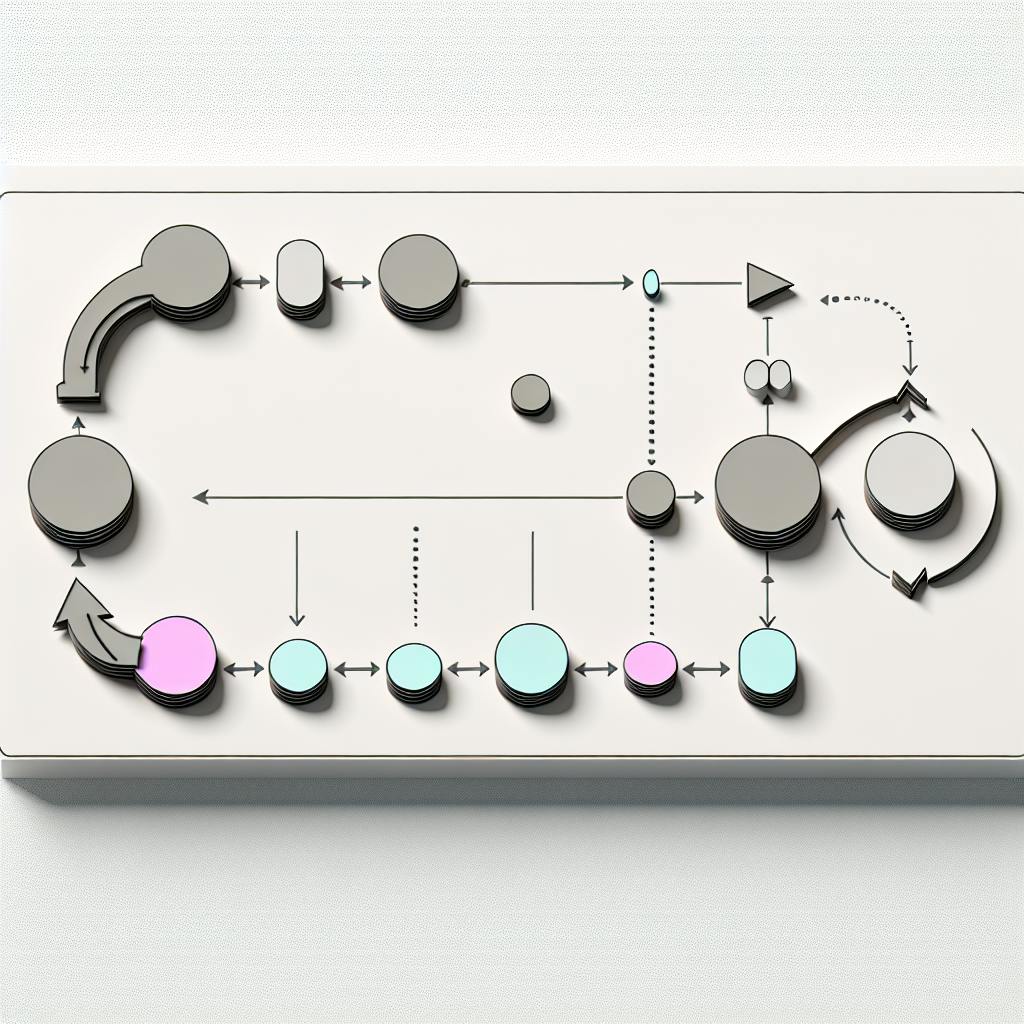

Common data pipeline architecture:

- ETL tool extracts data from sources

- Transforms and cleans data

- Loads processed data into Redshift

- Redshift stores data and enables fast SQL queries for analysis

So in summary, ETL tools feed data into Amazon Redshift, which serves as a data warehouse. Redshift provides the performance and architecture for analytics, while ETL handles the data preparation required first. The two technologies complement each other in modern data pipelines.

sbb-itb-6210c22

Creating an Amazon Web Services Account

How to Create an AWS Account

To get started with Amazon Redshift, you first need to create an AWS account if you don't already have one. Here are the steps:

- Go to aws.amazon.com and click on "Create an AWS Account".

- Follow the on-screen instructions to sign-up. You'll need to provide contact and payment information.

- Once your account is created, you'll be directed to the AWS Management Console. Take note of your account ID, as you'll need this later.

And that's it - you now have access to all AWS services, including Amazon Redshift.

Understanding AWS Redshift Documentation

As a beginner, it's important to familiarize yourself with the official AWS Redshift documentation. Here you'll find:

- In-depth guides on getting started, cluster management, loading data, etc.

- API reference documentation for developers

- Best practices, tips, and examples

- Answers to frequently asked questions

Be sure to bookmark and reference the docs as you work through this tutorial and build your first Redshift cluster. Pay special attention to the Getting Started section.

Amazon Redshift Free Trial: Getting Started

Amazon Redshift offers a free trial so you can explore the service at no cost. Specifically, new AWS accounts get:

- 750 hours of Redshift cluster usage per month for 2 months

- 128GB of storage for backup and restore usage per month for 2 months

To take advantage of this:

- Make sure you use a new AWS account created after November 1, 2020.

- Launch a Redshift cluster within 30 days of creating your account.

With the free trial, you can follow this beginner's tutorial, load test data, run queries, and more without worrying about costs.

Preparing for AWS Certified Data Analytics – Specialty

This beginner's tutorial touches on aspects of Amazon Redshift that are covered in the AWS Certified Data Analytics - Specialty certification exam, including:

- Initial account setup

- Cluster launching and configuration

- Loading data

- Running queries

So going through this tutorial helps lay the foundation if you're interested in pursuing official AWS Redshift training and certification later on.

Launching an Amazon Redshift Cluster

Launching an Amazon Redshift cluster is the first step to setting up your data warehouse. There are a few key things to consider when launching your first cluster:

Steps to Launch an Amazon Redshift Cluster

Follow these steps to launch your first Amazon Redshift cluster:

-

Sign in to the AWS Management Console and open the Amazon Redshift console. If you don't already have an AWS account, you'll need to create one first.

-

Click "Create cluster" to start the cluster creation workflow.

-

Select the type and number of nodes for your cluster. Consider starting with a single-node cluster for testing purposes.

-

Configure the database name, database port, master user name, and master user password.

-

For cluster permissions, choose the default option to allow access from resources and accounts you own.

-

Select your preferred VPC and subnet from the network and security options.

-

Leave the database encryption option enabled for security.

-

Click "Create cluster" to launch your Amazon Redshift cluster. It can take several minutes for the cluster to finish being created.

Amazon Redshift Clusters: Configuration and Management

There are a few key configuration options to understand when setting up an Amazon Redshift cluster:

-

Node type - Select the right node type based on your workload requirements. Dense compute and dense storage nodes optimize compute and storage resources respectively.

-

Number of nodes - Start with a single node for testing, then scale up to a multi-node cluster for production workloads.

-

Cluster parameters - Adjust memory allocation, concurrency, and other settings to tune cluster performance.

Ongoing tasks like monitoring system health, maintaining security settings, backing up data, and updating to new versions are also important for cluster management.

Connecting to Your Amazon Redshift Cluster

Once your Amazon Redshift cluster is available, you can connect to it using:

-

The query editor in the AWS Management Console.

-

SQL client tools like SQL Workbench/J or DBeaver.

-

Business intelligence and data visualization tools like Tableau, Looker, or Quicksight.

To connect, you'll need the cluster endpoint and database credentials set during cluster creation.

Introduction to Amazon Redshift Serverless

Amazon Redshift Serverless allows you to run SQL queries against your data in Amazon S3 without having to manage clusters. It auto-scales query processing to meet workload needs. Key features include:

-

No cluster management - Redshift Serverless handles provisioning resources for queries.

-

Auto scaling - Processing power scales automatically based on query demand.

-

Pay per query pricing - You only pay for the queries you run.

For low-administration workloads, Redshift Serverless offers a fully hosted data warehousing option.

Amazon Redshift Data Loading Techniques

Amazon Redshift provides a variety of techniques for efficiently loading data into the data warehouse. Understanding these methods is key for optimizing data ingestion.

Amazon Redshift Loading Data: Understanding the COPY Command

The COPY command is the most efficient way to load data into Amazon Redshift. It allows loading data in parallel from Amazon S3, Amazon EMR, or any SSH-enabled host.

Key benefits of using COPY for data loading:

-

Parallel data loading: COPY loads data in parallel using all nodes in the cluster, making it much faster than using INSERT statements.

-

Integration with AWS data sources: COPY can directly load data from S3, EMR, or DynamoDB, avoiding extra data transfer steps.

-

Data format flexibility: COPY supports loading CSV, JSON, AVRO, ORC, Parquet and other data formats.

-

Automation capabilities: COPY can be executed from scripts to automate recurring loads.

To use COPY efficiently:

- Use a manifest file for tracking large data sets in S3.

- Compress data files during loads to increase transfer speeds.

- Load data in sorted order according to the sort key of the target table.

Optimizing Amazon Redshift Table Design for Data Loading

Careful table design ensures efficient data loading in Amazon Redshift:

-

Choose optimal sort and distribution keys based on query patterns to minimize data movement during loads and queries.

-

Use columnar storage for data warehouse workloads through defining columns appropriately. Allows only relevant columns to be read.

-

Encode and compress columns properly based on data patterns to reduce storage needs.

-

Define primary key and foreign key constraints to optimize joins and ensure referential integrity during incremental data loads.

Properly designing tables upfront alleviates performance bottlenecks for loading and querying data down the road.

Automating Data Loading with AWS Services

AWS services can be used to automate loading data into Amazon Redshift:

-

AWS Lambda functions can execute COPY commands on a schedule. Useful for automating incremental loads from S3.

-

AWS Data Pipeline enables creating data workflows that can orchestrate moving data from sources like DynamoDB into Amazon Redshift automatically.

-

AWS Glue Crawler can crawl data sources and update AWS Glue Data Catalog, which is used by Amazon Redshift Spectrum to create external tables.

Automation eliminates manual tasks and ensures data is loaded reliably at regular intervals.

Handling Amazon Redshift Data Types During Loading

When loading data into Amazon Redshift tables, care should be taken to map source data types appropriately:

-

Map timestamp data types accurately to enable proper filtering and range comparisons.

-

Define numeric data types to avoid overflow errors and unnecessary precision.

-

Use VARCHAR vs CHAR data types to conserve storage space for variable length text data.

-

Use GEOMETRY data type for spatial data to enable geospatial queries.

Proper data type handling maintains data integrity and enables optimal query performance during analysis.

Querying and Analyzing Data in Amazon Redshift

Amazon Redshift provides a powerful SQL interface for querying and analyzing the data in your data warehouse. As a fully managed data warehouse service, Redshift makes it easy to execute complex analytic queries across your data using standard SQL syntax.

In this section, we'll explore some of the key capabilities for querying and analyzing data in Redshift.

Utilizing the Amazon Redshift Query Editor

The Amazon Redshift Query Editor provides a convenient interface directly within the AWS Management Console to write and execute SQL queries against your Redshift data warehouse.

Some key features of the Redshift Query Editor include:

- Intelligent auto-complete and syntax highlighting to help compose SQL queries.

- Ability to run queries and view, filter, and download results sets.

- Query history to access previously run queries.

- Integrated visualization tools to visualize query result sets.

The Query Editor is a great way to directly test and prototype SQL logic against your Redshift schema without needing additional tools. It's perfect for ad-hoc analysis.

Advanced Query Techniques: Joins, Subqueries, and More

Amazon Redshift supports complex SQL capabilities like:

- Joins - Combine data from multiple tables together.

- Subqueries - Nest queries within other queries.

- Common table expressions (CTEs) - Temporary result sets for use within a query.

- Window functions - Apply calculations across sets of rows.

Using features like these allows creating sophisticated data transformations and analysis with Redshift SQL.

Some examples include:

- Join customer data from multiple tables to perform a detailed segmentation analysis.

- Use subqueries and CTEs to build modular, reusable query components.

- Calculate running totals and differences across rows using window functions.

Redshift's SQL dialect provides extensive flexibility.

Amazon Redshift Federated Query: Querying Outside Data Sources

Federated Query allows executing SQL queries across data stored in Amazon Redshift together with data stored in external databases like Amazon RDS, Amazon Aurora, and Amazon DynamoDB.

This provides a single interface to query across data warehouses and databases. Some use cases include:

- Query live transactional systems while also leveraging your Redshift historical data.

- Migrate data between sources or combine disparate sources together.

- Unify analytics across data siloes with a "single pane of glass" using SQL.

Monitoring and Optimizing Query Performance

As the volume of your data and the complexity of queries increases, tuning and optimizing query performance becomes crucial. Redshift provides detailed metrics about query execution, duration, and system utilization over time.

Best practices for optimizing Redshift query performance include:

- Analyzing slow running queries and queries that consume significant I/O or memory.

- Comparing estimated and actual query execution times to identify inaccurate query plans.

- Improving table and index designs based on query patterns.

- Leveraging Redshift's Workload Management (WLM) to allocate resources.

Careful monitoring combined with performance tuning and optimization helps unlock Redshift’s full capabilities to deliver fast, interactive queries against your large and growing data.

Scaling and Managing Amazon Redshift Resources

Amazon Redshift provides several features to help scale and manage resources to optimize performance as your data warehouse grows.

Amazon Redshift Workload Management (WLM)

Workload Management (WLM) allows you to define query queues and allocate cluster resources to meet business needs. With WLM you can:

- Prioritize queries by assigning them to different queues

- Allocate cluster memory and concurrency to queues to manage query performance

- Define query monitoring rules to route queries to queues automatically

- View real-time and historical query performance data to tune WLM settings

Some best practices for configuring WLM:

- Create separate queues for different query types or business groups

- Allocate more memory to high priority queues

- Set soft limits on query concurrency to prevent resource contention

- Continuously monitor and tune WLM configuration as needs change

Properly configuring WLM is crucial to prevent resource contention and maintain fast query performance as your data warehouse scales.

Compute Elasticity & Scalability with Concurrency Scaling

Concurrency Scaling automatically adds additional cluster capacity when you need it to handle an increase in concurrent read queries. Benefits include:

- Handles workload spikes without manual intervention

- Provides consistent query performance at any scale

- Only pay for additional capacity when it is added

- Easy to enable with no application changes required

Concurrency scaling allows your Redshift cluster to easily scale up and down to meet changing query demand. This prevents queries from queueing and maintains fast performance.

Amazon Redshift Performance: Tuning and Best Practices

There are several best practices to tune Amazon Redshift performance:

- Choose appropriate distribution styles and sorting keys

- Compress data with common column encoding schemes

- Analyze tables regularly to refresh statistics

- Tune WLM settings to allocate memory optimally

- Monitor system tables for insights into performance

Continuously monitoring query execution times, queue lengths, and system metrics is crucial. As your data warehouse scales, additional tuning may be required to maintain optimal performance.

Amazon Redshift Stored Procedures for Administrative Tasks

Amazon Redshift supports stored procedures for automating administrative tasks like:

- Loading data from S3

- Unloading result sets to S3

- Creating tables, views, indexes

- Updating table statistics

- Backup and restore

Stored procedures allow you to standardize and automate common data warehouse workflows. With proper permission controls, you can enable self-service capabilities for business users as well.

Using Amazon Redshift features like WLM, concurrency scaling, query monitoring, and stored procedures allows you to optimize, secure, and scale your data warehouse to meet evolving needs.

Conclusion: Wrapping Up Your AWS Redshift Journey

Recap of Amazon Redshift Fundamentals

Amazon Redshift is a fully managed data warehouse service that makes it simple and cost-effective to analyze all your data using standard SQL and your existing business intelligence tools. Some key fundamentals we covered in this beginner's tutorial include:

- Redshift architecture using a massively parallel processing (MPP) system

- Loading data into Redshift using the COPY command

- Table design and data types for optimal query performance

- Querying data with Redshift's SQL interface

By mastering these basics, you can easily get started with Redshift to create a scalable data warehouse.

Cleaning Up and Managing Costs

As you experiment with Redshift, make sure to delete any test clusters when you are done to avoid unnecessary charges. You can use features like automatic snapshots and concurrency scaling to optimize costs. Check the Billing Dashboard regularly to monitor usage.

Further Learning and AWS Redshift Training Resources

To continue advancing your Redshift skills, refer to the AWS Redshift documentation. AWS also offers self-paced labs and professional certification focused specifically on data analytics.

Exploring Next Steps in Your Data Analytics Journey

As you become more proficient with Redshift, consider expanding into more advanced data ingestion methods like AWS Glue ETL jobs. You can also integrate your new data warehouse with AWS analytics and machine learning services like Quicksight, SageMaker, and Athena.